Around the world in 80 Microamps: ESP32 and LoRa for low-power IoT – Christopher Biggs

- Promise of IoT

- Control everything

- Sensors everywhere

- Reduce cognitive load

- Problem

- Computers everywhere = wires everywhere

- Can’t be done every time

- Use 'em if you got 'em

- eg Power over ethernet, Ethernet over power, Ethernet over coax

- WiFi is great for connections. But what about power?

- Batteries are bad

- Lead acid – obsolete just about everywhere

- Single-use dry cells – leak

- Nickel Metal-hydride – Some stuff

- Lithium – Everything else

- Sample battery

- Chapstick-sized battery = 2 amp-hours (2 Ah)

- 3.7 volts

- Labels on batteries often lie – you need to always verify

- Capacity is quoted for a 20-hour discharge; the relationship between draw and runtime is not linear

- But they are getting better, pushed by phones, scooters and drones

- Off the shelf solutions for packs

- Smart ones may turn themselves off if the draw is very low

- Cell plus simple system works

- Cell with “Battery Management System” is a bit more complex

- Solar panels are useless at small sizes; they need to be A4 or A5 size at least

- Linux systems draw too much for non-wired sensors; they need to be used as hubs

- Computers can spend most of their time asleep

- Configure one or more wake interrupts

- Arduino deep sleep – sleep consumption as low as 6 microamps (38 years with the Chapstick battery)

- Watch out for stock voltage regulators (eats 10 mA)
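The 38-year figure above is just capacity divided by draw. A minimal sketch of that arithmetic, assuming an ideal 2 Ah cell with no self-discharge (real cells will do worse, and the function name is only illustrative):

```python
HOURS_PER_YEAR = 24 * 365


def battery_life_hours(capacity_mah: float, draw_ma: float) -> float:
    """Ideal runtime in hours: capacity divided by a constant current draw."""
    if draw_ma <= 0:
        raise ValueError("draw must be positive")
    return capacity_mah / draw_ma


CAPACITY_MAH = 2000.0  # the 2 Ah cell from the notes

# 6 microamps of deep-sleep draw, expressed in milliamps.
sleep_hours = battery_life_hours(CAPACITY_MAH, 0.006)
# The same cell feeding a regulator that wastes 10 mA all the time.
regulator_hours = battery_life_hours(CAPACITY_MAH, 10.0)

print(f"deep sleep only: {sleep_hours / HOURS_PER_YEAR:.0f} years")
print(f"with a 10 mA regulator: {regulator_hours / 24:.1f} days")
```

The second number shows why the stock regulator matters: at 10 mA the cell lasts days, not decades, no matter how well the processor sleeps.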

- ESP8266 sleep modes

- Several levels of sleep modes

- Wake up every 5 minutes = 1 week battery life

- ESP32

- Sleepier modes

- Complex sleep patterns.

- Ultra-low-power (ULP) coprocessor

- 4 registers, 10 instructions, 16-bit, special slow memory

- Can be configured to wake up at intervals

- Can go back to sleep or wake the main cores

- ULP in practice

- Write code, load into the ULP processor

- Enough code to decide whether to go back to sleep or wake up the main processor
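The decision the ULP makes on each wake-up can be modelled in a few lines. This is a plain Python simulation of the logic only, not ULP code (real ULP programs are written for the coprocessor itself); the `UlpState` and `ulp_tick` names and the thresholds are assumptions for illustration:

```python
from dataclasses import dataclass


@dataclass
class UlpState:
    """Counters that would live in the ULP's slow memory."""
    ticks_since_wake: int = 0
    pulses_since_wake: int = 0


def ulp_tick(state: UlpState, pulse_seen: bool,
             max_ticks: int = 15, max_pulses: int = 100) -> bool:
    """Run one periodic ULP wake-up.

    Updates the counters and returns True if the main CPU should be woken,
    either because enough ticks have elapsed or enough pulses were counted.
    """
    state.ticks_since_wake += 1
    if pulse_seen:
        state.pulses_since_wake += 1
    wake = (state.ticks_since_wake >= max_ticks
            or state.pulses_since_wake >= max_pulses)
    if wake:
        # The main CPU would read the counters here before they are reset.
        state.ticks_since_wake = 0
        state.pulses_since_wake = 0
    return wake


state = UlpState()
decisions = [ulp_tick(state, pulse_seen=False, max_ticks=3) for _ in range(4)]
print(decisions)
```

Most ticks return False, so the expensive main cores stay asleep and only the tiny counter update runs.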

- Aim for efficiency

- Sleep as much as possible

- Use interrupts not polling where possible

- Nasty Surprises

- Simple resistor ladders leak power

- Linear regulators leak power

- Poor antennas cost watts

- Beware: USB programming bridges that are always on

- Almost all the off-the-shelf IoT boards are no good for permanent installation

- Solutions

- Turn off everything you are not using

- Turn the radio off when not in use; turn the receiver on now and then. Do store-and-forward

- Slow down the CPU, turn off Bluetooth

- Reduce brightness of lights

- Be careful about cutting out safety features
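Store-and-forward, as mentioned in the solutions above, means buffering readings while the radio is off and sending them in one batch when it comes on. A minimal sketch, assuming a hypothetical `send` callable that stands in for whatever radio driver is in use:

```python
from collections import deque


class StoreAndForward:
    """Buffer readings while the radio is off; send them in one batch later."""

    def __init__(self, send, max_buffered=64):
        self._send = send  # hypothetical callable that transmits a list
        # A bounded deque drops the oldest reading when the buffer is full.
        self._buffer = deque(maxlen=max_buffered)

    def record(self, reading):
        """Store a reading without touching the radio."""
        self._buffer.append(reading)

    def flush(self):
        """Call while the radio is on. Returns the number of readings sent."""
        if not self._buffer:
            return 0
        batch = list(self._buffer)
        self._send(batch)      # if this raises, the buffer is kept for a retry
        self._buffer.clear()
        return len(batch)


outbox = []
queue = StoreAndForward(outbox.append, max_buffered=3)
for reading in (10, 11, 12, 13):
    queue.record(reading)
print(queue.flush(), outbox)
```

One radio wake-up then carries many readings, which is where the power saving comes from.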

- Case Study – Smart water meter in multi-tenant building

- Existing meter has a physical rotating dial, can count rotations

- In cellar with no power

- Create own

- ESP32

- WiFi for setup or maintenance

- LoRa for comms every 15 minutes

- ULP monitors 4 sensors

- ULP wakes the CPU after a number of elapsed minutes and/or pulses

- Transmits to a Linux-based hub that covers the building

- 150 mA on WiFi

- 100 mA over LoRa

- 50 mA when idle with radio on

- 40 mA when idle with radio off

- 80 µA in deep sleep

- Average under 1 mA, lifetime = 1-5 years
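The average current comes from weighting each mode by how long the device spends in it. A rough sketch using the currents listed above; the phase durations and the battery capacity are assumptions, since the notes do not record them:

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Phase:
    name: str
    current_ma: float
    seconds: float


def average_current_ma(phases):
    """Time-weighted average current over one full cycle."""
    total_seconds = sum(p.seconds for p in phases)
    charge = sum(p.current_ma * p.seconds for p in phases)
    return charge / total_seconds


def lifetime_years(capacity_mah, avg_ma):
    """Ideal lifetime, ignoring self-discharge and regulator losses."""
    return capacity_mah / avg_ma / (24 * 365)


CYCLE_SECONDS = 15 * 60   # one LoRa report every 15 minutes (from the notes)
TX_SECONDS = 1.0          # assumed time spent transmitting
AWAKE_SECONDS = 2.0       # assumed time awake with the radio off
CAPACITY_MAH = 6000.0     # assumed pack, e.g. three 2 Ah cells

cycle = [
    Phase("LoRa transmit", 100.0, TX_SECONDS),
    Phase("awake, radio off", 40.0, AWAKE_SECONDS),
    Phase("deep sleep", 0.08, CYCLE_SECONDS - TX_SECONDS - AWAKE_SECONDS),
]

avg = average_current_ma(cycle)
years = lifetime_years(CAPACITY_MAH, avg)
print(f"average {avg:.3f} mA, about {years:.1f} years")
```

With these assumed durations, the few seconds awake account for most of the charge used, more than almost 15 minutes of deep sleep, so shortening the awake time is usually the first thing to try.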

- Recap

- Wires are hard

- Measure and understand usage

- The basics of deep sleep

- ESP32 Ultra-low-Power co-processor

- Design your own battery-friendly systems (see Arts Miniconf presentation)

- Project and monitor your battery lifetime

- Website

Deep Learning, Not Deep Creepy – Jack Moffitt

- What is machine learning

- Make decisions based on statistics

- How is deep learning different?

- Many layers of neurons each learning more sophisticated representations of features in the data

- Transfer learning – reuse a neural network for a similar task where there is less training data

- Generative Adversarial networks

- The dark side of deep learning

- Works better with more data. Incentive for companies to get a huge amount of data

- Computationally very expensive – Creates incentive to move things to large clouds

- Inaccessible to smaller players

- Hard to debug, black boxes.

- Amplifies biases in training data; there are some ways to fix this, but it is not generally fixable

- Data may be low quality

- Machine learning @mozilla

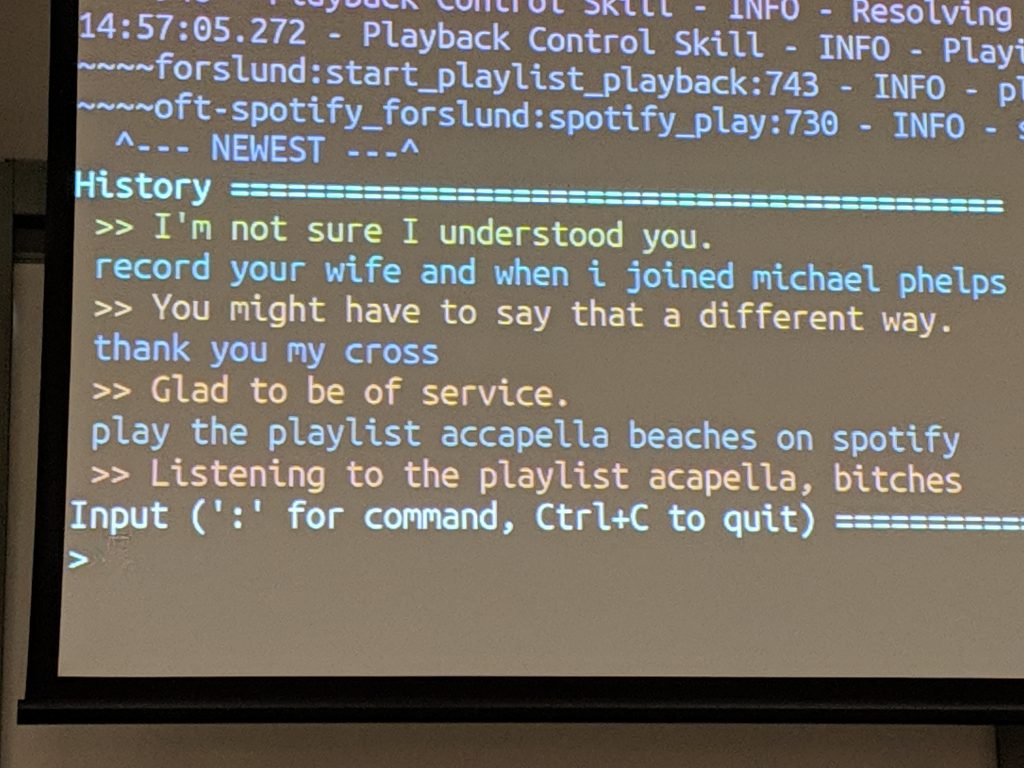

- DeepSpeech and Common Voice

- DeepSpeech – state-of-the-art speech recognition

- Existing solutions are owned by big companies. They cost money and run in the cloud

- Opening up models and training data will allow innovation

- Based on Baidu’s Deep Speech paper

- pre-trained models for English

- runs real-time on mobile

- word error rate of 6.48% on LibriVox

- streaming support
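Word error rate is the word-level edit distance between the reference transcript and the recognizer's output, divided by the number of reference words. A minimal sketch of the standard calculation (this is not DeepSpeech's own evaluation code, and the function name is illustrative):

```python
def word_error_rate(reference: str, hypothesis: str) -> float:
    """(substitutions + deletions + insertions) / number of reference words."""
    ref = reference.split()
    hyp = hypothesis.split()
    if not ref:
        raise ValueError("reference must contain at least one word")

    # prev[j] holds the edit distance between the reference words seen so far
    # and the first j hypothesis words.
    prev = list(range(len(hyp) + 1))
    for i, ref_word in enumerate(ref, start=1):
        cur = [i]
        for j, hyp_word in enumerate(hyp, start=1):
            cost = 0 if ref_word == hyp_word else 1
            cur.append(min(prev[j] + 1,          # deletion
                           cur[j - 1] + 1,       # insertion
                           prev[j - 1] + cost))  # match or substitution
        prev = cur
    return prev[-1] / len(ref)


print(word_error_rate("the cat sat on the mat", "the cat sat on a mat"))
```

A rate of 6.48% means roughly one word in fifteen is wrong, missing or extra.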

- Common Voice

- Crowdsource voice data for new applications

- 20 languages launched

- 1800 hours collected so far

- Deepproof – spelling and grammar checker

- Existing one is basically a keylogger (Grammarly)

- Needs to be small enough to run on device

- Learn by example, rather than from a few rules

- Less language-specific tuning

- More scalable

- Local inference to avoid sending private text to servers

- 12 million 300-character chunks from Wikipedia

- Inject plausible mistakes

- Real-life data

- Maybe improve with federated learning without disclosing text
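The training data described above pairs clean text with a corrupted copy. A small sketch of that idea, assuming three simple character-level error types (swap, drop, double); the notes do not say which mistakes the real project injects, so these are only illustrative:

```python
import random


def chunk_text(text: str, size: int = 300):
    """Split text into fixed-size character chunks (the last may be shorter)."""
    return [text[i:i + size] for i in range(0, len(text), size)]


def inject_mistake(text: str, rng: random.Random) -> str:
    """Return text with one typo at a randomly chosen pair of letters."""
    # Only consider adjacent, different letters so every edit changes the text.
    positions = [i for i in range(len(text) - 1)
                 if text[i].isalpha() and text[i + 1].isalpha()
                 and text[i] != text[i + 1]]
    if not positions:
        return text
    i = rng.choice(positions)
    kind = rng.choice(("swap", "drop", "double"))
    if kind == "swap":    # transpose two neighbouring letters
        return text[:i] + text[i + 1] + text[i] + text[i + 2:]
    if kind == "drop":    # leave a letter out
        return text[:i] + text[i + 1:]
    return text[:i] + text[i] + text[i:]  # type a letter twice


rng = random.Random(0)  # fixed seed so runs are repeatable
clean = "The quick brown fox jumps over the lazy dog."
pair = (inject_mistake(clean, rng), clean)  # (model input, training target)
print(pair)
```

Because the clean text is known, any amount of labelled training data can be generated this way without anyone's private writing.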

- LPCNet

- lots of text-to-speech systems are end-to-end

- a separate network converts spectrograms to audio

- Griffin-Lim sounds bad

- WaveNet / WaveRNN need tens of GFLOPS

- need something more efficient for on-device use

- Currently 1.5-6 GFLOPS

- real-time on mobile

- Works okay

- Other applications

- Speech compression

- Noise suppression

- Questions:

- Does the audio slow-down work on non-speech? Not really

- How do you deal with regional variations of speech and grammar? Common Voice is collecting data on these