The Vulkan Graphics API, what it means for Linux – David Airlie

- What is Vulkan

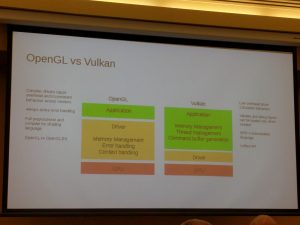

- Not OpenGL++

- From Scratch, Low Level, Open Graphics API

- Stack

- Loader (Mostly just picks the driver)

- Layers (sometimes optional) – Seperate from the drivers.

- Validation

- Application Bug fixing

- Tracing

- Default GPU selection

- Drivers (ICDs)

- Open Source test Suite. ( “throw it over the wall Open Source”)

- Why a new 3D API

- OpenGL is old, from 1992

- OpenGL Design based on 1992 hardware model

- State machine has grown a lot as hardware has changed

- Lots of stuff in it that nobody uses anymore

- Some ideas were not so good in retrospec

- Single context makes multi-threading hard

- Sharing context is not reliable

- Orientated around windows, off-screen rendering is a bolt-on

- GPU hardware has converged to just 3-5 vendors with similar hardware. Not as much need to hid things

- Vulkan moves a lot of stuff up to the application (or more likely the OS graphics layer like Unity)

- Vulkan gives applications access to the queues if they want them.

- Shading Language – SPIR-V

- Binary formatted, seperate from Vulkan, also used by OpenGL

- Write Shaders HSL or GLSL and they get converted to SPIR-V

- Driver Development

- Almost all Error checking needed since done on the validation layer

- Simpler to explicitly build command stream and then submit

- Linux Support

- Closed source Drivers

- Nvidia

- AMD (amdgpu-pro) – promised open source “real soon now … a year ago”

- Open Source

- Intel Linux (anv) –

- on release day. 3.5 people over 8 months

- SPIR -> NIR

- Vulkan X11/Wayland WSI

- anv Vulkan <– Core driver, not sharable

- NIR -> i965 gen

- ISL Library (image layout/tiling)

- radv (for AMD GPUs)

- Dave has been working on it since early July 2016 with one other guy

- End of September Doom worked.

- One Benchmark faster than AMD Driver

- Valve hired someone to work on the driver.

- Similar model to Intel anv driver.

- Works on the few Vulkan games, working on SteamVR

- Intel Linux (anv) –

- Closed source Drivers

Building reliable Ceph clusters – Lars Marowsky-Brée

- Ceph

- Storage Project

- Multiple front ends (S3, Swift, Block IO, iSCSI, CephFS)

- Built on RADOS data store

- Software Defined Storage

- Commodity servers + ceph + OS + Mngt (eg Open Attic)

- Makes sense at 4+ servers with 10 drives each

- metadata servce

- CRUSH algorithm to speread out the data, no centralised table (client goes directly to data)

- Access Methods

- Use only what you need

- RADOS Block devices <– most stable

- S3 (or Swift) via RadosGW <– Mature

- CephFS <— New and pretty stable , avoid stuff non meta-data intensive

- Introducing Dependability

- Availability

- Reliability

- Duribility

- Safety

- Maintainability

- Most outages are caused by Humans

- At Scale everything fails

- The Distributed systems are still vulnerable to correlated failures (eg same batch of hard drives)

- Advantages of Heterogeneity – Everything is broken different

- Homogeneity is non-sustainable

- Failure is inevitable; suffering is optional

- Prepare for downtime

- Test if system meets your SLA when under load and when degraded and during recovery

- How much available do you need?

- An extra nine will double your price

- A Bag full of suggestions

- Embrace diversity

- Auto recovery requires a >50% majority

- 3 suppliers?

- Mix arch and stuff between racks/pods and geography

- Maybe you just go with manually added recovery

- Hardware Choices

- Vendors have reference archetectures

- Hard to get vendors to mix, they don’t like that and fewer docs.

- Hardware certification reduces the risk

- Small variations can have huge impact

- Customer bought network card and switch one up from the ref architecture. 6 months of problems till firmware bug fixed.

- How many monitors do I need?

- Not performance critcal

- 3 is usually enough as long as well distributed

- Big envs maybe 5 or 7

- Don’t coverge (VMs) these with other types of nodes

- Storage

- Avoid Desktop Disks and SSDs

- Storage Node sizing

- A single node should not be more than 10% of your capacity

- You need space capacity at least as big as a single node (to recover after fail)

- Durability

- Erasure Encode more durabily and high percentage of disk used

- But recovery a lot slower, high overhead, etc

- Different strokes for different pools

- Network cards, different types, cross connect, use last years cards

- Gateways: tests okay under failure

- Config drift: Use config mngt (puppet etc)

- Monioring

- Perf as system ages

- SSD degradation

- Updates

- Latest software is always the best

- Usually good to update

- Can do rolling upgrades

- But still test a little on a staging server first

- Always test on your system

- Don’t trust metrics from vendors

- Test updates

- test your processes

- Use OS to avoid vendor lock in

- Disaster will strike

- Have backups and test them and recoveries

- Avoid Complexity

- Be aggressive in what you test

- Be commiserative in what you deploy only what you need

- Q: Minimum size?

- A: Not if you can fit on a single server

- Embrace diversity